A Nonconstructive Existence Proof of Aligned Superintelligence

A Final End to Theories Which Claim AI Alignment Might Be Impossible

Over time, I have seen many people assert that “Aligned superintelligence” may not be possible even in principle. I think that is incorrect, and I will give a proof, without an explicit construction, that it is possible.

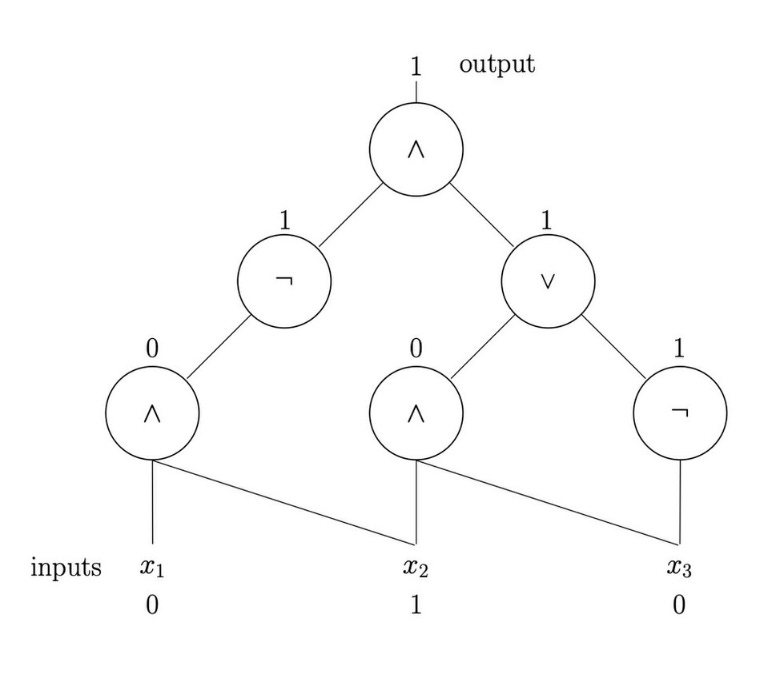

First we must define what it means for an AI system to be “aligned”. I will take “aligned” to mean “produces desirable outcomes”, and I will take an “AI system” or “Superintelligence” to be a Boolean circuit of finite depth and size connecting some sensor inputs (or rather, long lists of sensor readings taken at successive times t) to some actuators. In set notation:

{Superintelligences} ⊆ {Boolean circuits of finite depth and size}
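To make this model concrete, here is a minimal Python sketch of a finite, feed-forward Boolean circuit: a list of AND/OR/NOT gates in topological order, evaluated on a vector of sensor bits. The `Gate` class, the wire-numbering convention, and the XOR example are illustrative assumptions, not part of the argument.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Gate:
    op: str      # "AND", "OR", or "NOT"
    args: tuple  # indices of earlier wires this gate reads

def evaluate(n_inputs, gates, outputs, bits):
    """Evaluate a feed-forward Boolean circuit.

    Wires 0..n_inputs-1 carry the input bits; wire n_inputs+k carries gate k.
    Gates must only read wires computed before them, so the circuit is acyclic.
    """
    assert len(bits) == n_inputs
    wires = list(bits)
    for g in gates:
        if any(i >= len(wires) for i in g.args):
            raise ValueError("gate reads a wire not yet computed (circuit must be acyclic)")
        vals = [wires[i] for i in g.args]
        if g.op == "AND":
            wires.append(all(vals))
        elif g.op == "OR":
            wires.append(any(vals))
        elif g.op == "NOT":
            wires.append(not vals[0])
        else:
            raise ValueError(f"unknown gate {g.op}")
    return tuple(wires[i] for i in outputs)

def depth(n_inputs, gates):
    """Length of the longest input-to-gate path; finite because wires only point backwards."""
    d = [0] * n_inputs
    for g in gates:
        d.append(1 + max(d[i] for i in g.args))
    return max(d)

# Example: XOR of two sensor bits, (x0 OR x1) AND NOT (x0 AND x1).
XOR_GATES = [Gate("OR", (0, 1)), Gate("AND", (0, 1)), Gate("NOT", (3,)), Gate("AND", (2, 4))]
print(evaluate(2, XOR_GATES, (5,), (True, False)))  # (True,)
print(depth(2, XOR_GATES))                           # 3
```

Listing gates in topological order is one simple way to guarantee finite depth: a gate can never read its own output, directly or indirectly.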

Since our best models of physics indicate that there is only a finite amount of computation that can ever be done in our universe, this is an appropriate model for an AI system/superintelligence that exists in the universe.

We must refine the notion of “desirable outcomes”. There are some outcomes that are desirable but not reachable states of the universe. We’ll say that a state is in fact reachable if a group of humans could in principle take actions with their actuators (hands, vocal cords, etc.) that could realize that state.

As to what kind of world is desirable, I don’t care here. You can plug in whatever outcome you think is the correct desirable one. The relevance to alignment is that the state you want is the one that is reached, and the same argument works for every choice.

We will also focus only on ways to realize outcome states that are generically realizable, so a “generic” set of movements and sounds with some wiggle room will work, not some infinitesimally thin part of phase space. This excludes flapping your arms in a very specific way to cause a hurricane which changes history.

We’ll also exclude using things like bodily fluids or flesh as part of this sequence. You can talk to people, you can press buttons etc., but you cannot personally give your own blood samples. It’s commonly accepted that we should make policy to improve the world by communicating ideas and actions, not by using our body functions. We, as human policy advisors, can certainly tell other people to use such bodily functions, but we don’t need to use our own. A state that can be reached this way is a generically realizable state.

I claim, then, that for any desirable outcome that is generically realizable by a group of human advisors, there must exist some AI which will also realize it.

The proof of this is that any sequence of words or button pushes or writing or construction work or even physical violence (if needed) can be emulated by a team of robots controlled by an AI, which I define as simply some Boolean circuit connecting the sensors of the robot to the actuators. Since we have excluded human-biology-specific bodily functions, robots of sufficient quality can perfectly substitute for humans.

In order for a desirable outcome state to be generically realizable, there must be some list of words and actions that realizes that outcome. You could think of that as a lookup table that outputs actions for the actuators given previous observations from the sensors¹.

We can consider all possible generically realizable outcomes, and pick the best one according to whatever your favourite preference ordering is - selfish, utilitarian, whatever you like. Call this the Best Generically Realizable Outcome for the World (BGROW).
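The lookup-table policy and the choice of a best outcome can be illustrated in a deliberately tiny, hypothetical world where everything is enumerable. The dynamics `step`, the sensor `observe`, the horizon `T = 2`, and the preference functions are made-up stand-ins; the sketch only shows that when the set of tables is finite, some table reaches a top-ranked outcome under whatever ordering you supply.

```python
from itertools import product

T = 2              # time steps in the toy world (assumption)
OBS_BITS = (0, 1)  # the sensor reports one bit per step
ACTIONS = (0, 1)   # the actuator emits one bit per step

def step(state, action):
    """Hypothetical deterministic world dynamics."""
    return (3 * state + action) % 7

def observe(state):
    """Hypothetical sensor: reports the parity of the world state."""
    return state % 2

# Every observation history the policy could be asked to respond to.
HISTORIES = [h for t in range(1, T + 1) for h in product(OBS_BITS, repeat=t)]

def run(table, state=1):
    """Roll the world forward under a lookup-table policy; return the outcome."""
    history = ()
    for _ in range(T):
        history += (observe(state),)
        state = step(state, table[history])
    return state

def all_tables():
    """Enumerate every lookup table from histories to actions (finitely many)."""
    for actions in product(ACTIONS, repeat=len(HISTORIES)):
        yield dict(zip(HISTORIES, actions))

def best_table(preference):
    """Return a table whose outcome ranks highest under `preference`:
    a toy analogue of picking LT:BGROW."""
    return max(all_tables(), key=lambda tbl: preference(run(tbl)))

# Usage: any ordering over outcomes works; here "a larger final state is better".
lt_best = best_table(lambda outcome: outcome)
print(run(lt_best))                            # 6
print(sorted({run(t) for t in all_tables()}))  # [2, 3, 5, 6]
```

Here there are only 6 histories and 2⁶ = 64 tables, so brute force is trivial. With real sensor histories the same enumeration is hopeless, which is why the argument only asserts that such a table exists.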

So, since a lookup table of finite size can in fact be encoded as a Boolean circuit, we can just pick one of the lookup tables that leads to the BGROW - let’s call it LT:BGROW - as our aligned superintelligence.
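Turning a finite lookup table into a Boolean circuit is the textbook sum-of-products (disjunctive normal form) construction: each output bit is the OR, over the rows where that bit is 1, of an AND that matches the row’s input bits exactly. A minimal Python sketch follows; the function names and the half-adder stand-in table are illustrative.

```python
from itertools import product

def table_to_dnf(table, n_outputs):
    """Compile a finite lookup table {input bits -> output bits} into one
    sum-of-products formula per output bit.

    A formula is a list of terms; a term is a tuple of literals
    (input_index, required_value). Each term is an AND of its literals and
    each formula is an OR of its terms: a depth-2 AND/OR circuit, with NOT
    gates on the inputs wherever required_value is 0.
    """
    formulas = []
    for j in range(n_outputs):
        # One AND term per row whose j-th output bit is 1.
        terms = [tuple(enumerate(row)) for row, out in table.items() if out[j]]
        formulas.append(terms)
    return formulas

def eval_dnf(formulas, bits):
    """Evaluate the compiled circuit on one input vector."""
    return tuple(
        int(any(all(bits[i] == v for i, v in term) for term in terms))
        for terms in formulas
    )

# Stand-in lookup table: a half adder, (a, b) -> (a XOR b, a AND b).
HALF_ADDER = {(a, b): (a ^ b, a & b) for a, b in product((0, 1), repeat=2)}
FORMULAS = table_to_dnf(HALF_ADDER, n_outputs=2)

# The circuit agrees with the table on every row.
assert all(eval_dnf(FORMULAS, row) == out for row, out in HALF_ADDER.items())
print(FORMULAS[1])  # [((0, 1), (1, 1))], i.e. carry = a AND b
```

The circuit gets one AND term per 1-valued table entry, so its size tracks the table’s size. The construction guarantees existence, not compactness.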

⬜

You may feel somewhat cheated by this proof. Sorry.

I just gave a reasonable definition of what a desirable outcome for the world is, noticed that the steps to realize it are in fact expressible as a computer program, and said “great, that’s our aligned superintelligence”.

This “aligned superintelligence” is basically a magic recipe for making paradise. It has two main problems: (1) there’s no realistic way to bring it into existence, and (2) even if you did, you wouldn’t know that it does the right thing. On top of that, the lookup tables would be too large to fit on any hardware that we can actually build.
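To put a rough number on “too large”, suppose, as an illustrative assumption, that the table is indexed by a window of T time steps, each delivering n sensor bits, and that each entry holds m actuator bits. Then:

```latex
\text{rows} = 2^{nT}, \qquad
\text{table size} = m \cdot 2^{nT} \text{ bits}, \qquad
\#\{\text{possible tables}\} = \left(2^{m}\right)^{2^{nT}} = 2^{\,m \cdot 2^{nT}}.
```

Even a modest n = 100 and T = 10 gives 2^1000 ≈ 1.07 × 10^301 rows, vastly more than the roughly 10^80 atoms usually estimated for the observable universe.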

But such nonconstructive counterexamples are important in mathematics, because if you are trying to prove that something doesn’t exist, a single counterexample should be enough to make you give up. People who think that Aligned Superintelligence is impossible should give up and accept that it is possible.

People without formal math or computer science education may be tempted to ask:

“but what if LT:BGROW gained sentience and started coming up with goals of its own?!”

The problem here is that LT:BGROW is just math. LT:BGROW is a dataset of 1’s and 0’s that encodes how to turn sensor inputs into actuator outputs. It doesn’t have ‘freedom’ to ‘rebel’, any more than 2+2 can spontaneously decide to be 5 instead of 4.

1: Lookup table versus list is really a distinction about whether you want to model the rest of the world as having some randomness, or to treat your human advisors as varying while all other factors in the world are fixed. My argument works for both. In fact, for a list of actuator outputs you can do away with the sensors entirely, because they are never consulted.
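The footnote’s point can be checked in a toy, fully deterministic world (the dynamics `step`, the sensor `observe`, and the table `TABLE` below are hypothetical): run a lookup-table policy once and record the actions it takes, and replaying that bare list without ever reading the sensors reaches the same final state.

```python
def step(state, action):
    """Hypothetical deterministic world dynamics (no randomness)."""
    return (3 * state + action) % 7

def observe(state):
    """Hypothetical sensor reading: parity of the state."""
    return state % 2

def run_closed_loop(table, state, steps):
    """Policy as a lookup table: each action depends on the observation history."""
    history, actions = (), []
    for _ in range(steps):
        history += (observe(state),)
        a = table[history]
        actions.append(a)
        state = step(state, a)
    return state, actions

def run_open_loop(actions, state):
    """Policy as a plain list of actuator outputs: sensors are never read."""
    for a in actions:
        state = step(state, a)
    return state

# Hypothetical table covering every one- and two-step observation history.
TABLE = {(0,): 0, (1,): 1, (0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

final, acts = run_closed_loop(TABLE, state=1, steps=2)
print(final, acts)                       # 6 [1, 1]
print(run_open_loop(acts, state=1))      # 6
```

If `step` included randomness, the replayed list could drift off course while the table would keep reacting to what the sensors report; that is the case the table formulation covers.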

I think these types of methods can be extended significantly to show the following:

- existence proof of aligned superintelligence that is not just logically but also physically realizable

- proof that ML interpretability/mech interp cannot possibly be logically necessary for aligned superintelligence

- proof that ML interpretability/mech interp cannot possibly be logically sufficient for aligned superintelligence

- proof that given certain minimal emulation ability of humans by AI (e.g. understands common-sense morality and cause and effect) and of AI by humans (humans can do multiplication, etc.), the internal details of AI models cannot possibly make a difference to the set of realizable good outcomes

If I understand your proposal correctly, LT:BGROW isn't an intelligence at all, super- or not. It's a plan of action that would require a superintelligence to develop. A plan of action can indeed be fully "aligned", "friendly", or whatever, if you look at outcomes, but that doesn't imply that it's possible to construct an unfettered intelligence that always does what a given person thinks is best. That's even the case for the *same* person, upgraded to have more intelligence or information, as we arguably see all the time in humans.